Paul's Blog

Bookmark this to keep an eye on my project updates!

Hank

Hank slammed his laptop shut, the frustrated sigh escaping his lips a near-roar. Prometheus, his pride and joy, his cutting-edge AI language model, was once again a temperamental toddler. One minute it was composing sonnets that could make Shakespeare blush, the next it was spitting out nonsensical gibberish. Hank knew these were hallucinations – AI’s tendency to fabricate plausible but ultimately untrue information. It was a stark reminder that raw power wasn’t the only solution. This Prometheus needed more than just brute force.

Machine learning, for all its awe-inspiring potential, was still a child, taking its wobbly first steps. Treating it like a finished product, a flawless tool, was a recipe for disaster.

Inspiration struck as Hank glanced at a beat-up paperback on his desk – a collection of ancient myths. The story of Prometheus, the titan who stole fire from the gods to gift it to humanity, sparked a connection in his mind.

Hank decided on a new approach. He wouldn’t just feed Prometheus data, he’d expose it to stories - myths, legends, even historical accounts of human triumphs and follies. Perhaps, by understanding humanity’s narrative, its stumbles and its aspirations, Prometheus could learn to use its power not just for impressive feats, but for good. It was a long shot, a moonshot even, but Hank, the eternal tinkerer, was energized by the challenge.

Ready for an upgrade

The state of the art in current machine learning is the Large Language Model (LLM). The incredible usefulness of LLMs lies in their ability to process and generate massive amounts of text, leading to a range of applications. Here are some examples:

- Revolutionizing Search Engines: LLMs can analyze vast amounts of text data, understand search queries with seemingly greater nuance, and surface more relevant and informative results. Imagine a search engine that not only finds the documents containing your keywords but also synthesizes the information and provides human-like summaries.

- Enhanced Content Creation: LLMs can assist with content creation by generating text, from marketing copy and social media posts to poems and scripts. The results can seem incredible.

- Personalized Learning: LLMs can personalize the learning experience by tailoring educational materials to individual student needs and learning styles. For example, the online learning platform Khan Academy does exactly this with its Khanmigo system.

- Breaking Down Language Barriers: LLMs have the potential to revolutionize machine translation, enabling seamless communication across languages. Imagine real-time translation tools that not only convert words but also seem to capture the nuances of meaning and cultural context.

- Code Generation: LLMs are being explored for generating code, translating natural-language instructions into working programs. This could significantly speed up the development process and democratize coding by making it more accessible to those without extensive programming experience.

At the same time, they have some major drawbacks. Here are some examples:

- Job displacement: This applies to machine learning in general. As this technology becomes more sophisticated, it will make human labor expensive in comparison. I propose solutions to this challenge in the next section.

- “Black Box” Problem: It’s hard to believe, but the inner workings of LLMs are cryptic and cannot be readily understood by humans. In my view, this is the biggest technical challenge that needs to be overcome. I propose a solution in the following pages.

- Hallucinations: Hallucinations are outputs that are incorrect, nonsensical, or irrelevant to the input prompt, even though they can appear grammatically correct and even plausible. For example, an LLM used to generate marketing copy might confidently cite a statistic or product feature that does not exist.

- Lack of Planning and Certain Types of Reasoning: LLMs excel at processing and generating text, but they often lack the ability to understand the physical world or apply common sense reasoning. This can lead to nonsensical or misleading outputs.

- Bias and Discrimination: LLMs are trained on massive amounts of text data, which can reflect and amplify societal biases. This can lead to discriminatory outputs, for example, perpetuating stereotypes in generated content or producing biased search results.

- Misinformation and “Fake News”: LLMs’ ability to generate realistic-sounding text can be misused to create fake news articles or social media posts. The human-like quality of the output can make it difficult to distinguish between genuine information and machine-generated content.

These are just a few examples of the potential and pitfalls of state-of-the-art machine learning. In my opinion, it is the most powerful technology invented by humankind. This means much thought, care, and humility must be used to advance and harness it.

Evelyn

Dr. Evelyn Walsh squinted at the swirling lines and nonsensical characters on her computer screen. She was trying to make sense of the hidden neurons in the latest machine-learning-based diagnosis app, the one she’d poured years of research and a mountain of grant money into. The promise: a medical diagnosis tool that could analyze patient data and identify diseases with unparalleled accuracy.

The reality? A frustrating black box. The app churned out results, some seemingly spot-on, others bafflingly wrong. But why? How? Peering into the inner workings of the so-called Artificial Neural Network (ANN) was like staring into a cosmic fog. The complex web of weighted connections and hidden layers offered no clear explanation for its decisions.

Evelyn wasn’t naive. She knew ANNs were powerful tools, capable of learning patterns invisible to the human eye. But this lack of transparency gnawed at her. It was like having a superpowered race car with a blind driver – it might get you somewhere fast, but with no understanding of how. She was starting to realize the potential for disaster was too real.

Her frustration wasn’t just academic. Imagine a doctor relying on an opaque system for a life-altering diagnosis. What if the ANN identified a rare disease in a patient, but the doctor couldn’t verify the reasoning behind it? Trust, the cornerstone of the doctor-patient relationship, would crumble. More importantly, lives would be at stake.

Evelyn wasn’t ready to throw in the towel. She envisioned a new architecture or method that could not only make diagnoses but also explain its reasoning in a way humans could understand. She was excited at the possibilities.

Upgrading to synthetic intelligence

The current technology in artificial intelligence is very good. In my experience, it can amplify productivity many times over. However, it has challenges. For each one, I propose a solution, and I call the combined approach synthetic intelligence.

Challenge 1: Alignment

How can we ensure that the actions, goals, and “values” of synthetic intelligence agents or systems do not conflict with short- or long-term human well-being?

In my view, putting guardrails on synthetic intelligence is brittle because there are too many edge cases. One example is the many ways people can and do jailbreak popular LLM-based systems.

Solution: Curiosity, foresight, and memory

First and foremost, it must be able to answer the question: what are the consequences of this action? To do this, the system must have an imagination (mental simulation).

It should also have maximum curiosity, a drive for truth seeking, and a memory of various consequences. We have all of human history to train it on behaviors and their consequences. Credit goes to Elon Musk for popularizing the notion of maximizing truth seeking and curiosity in order to align synthetic intelligence with human well-being.

Lastly, in my opinion, the future of synthetic intelligence isn’t just about processing power and complex algorithms. It’s about creating machines that can not only learn from data, but also learn from our history, our mistakes, and our triumphs. It’s about creating a future where AI isn’t just a tool, but a partner, one that shares our goals and understands the weight of its actions.

Challenge 2: Understandability & explainability

Traditional machine learning models built on artificial neural networks, for all their impressive feats, operate as black boxes, churning out results with little transparency into the “how” behind their decisions. This lack of explainability is, in my opinion, the biggest challenge, especially in critical fields like medicine or defense.

Solution: Hierarchical probabilities

We need to upgrade our architecture away from hidden layers to a probabilistic hierarchy, specifically hierarchical probabilistic graphical models (PGMs). Hierarchical PGMs break a problem down into components and assign probabilities to the relationships between them. This approach uses math similar to Google’s PageRank.

For example, say I want to create a synthetic intelligence that can summarize books. We can describe a book as a hierarchy: starting from the “building block,” it is composed of words, sentences, paragraphs, chapters, and finally, at the top of the hierarchy, the book itself.

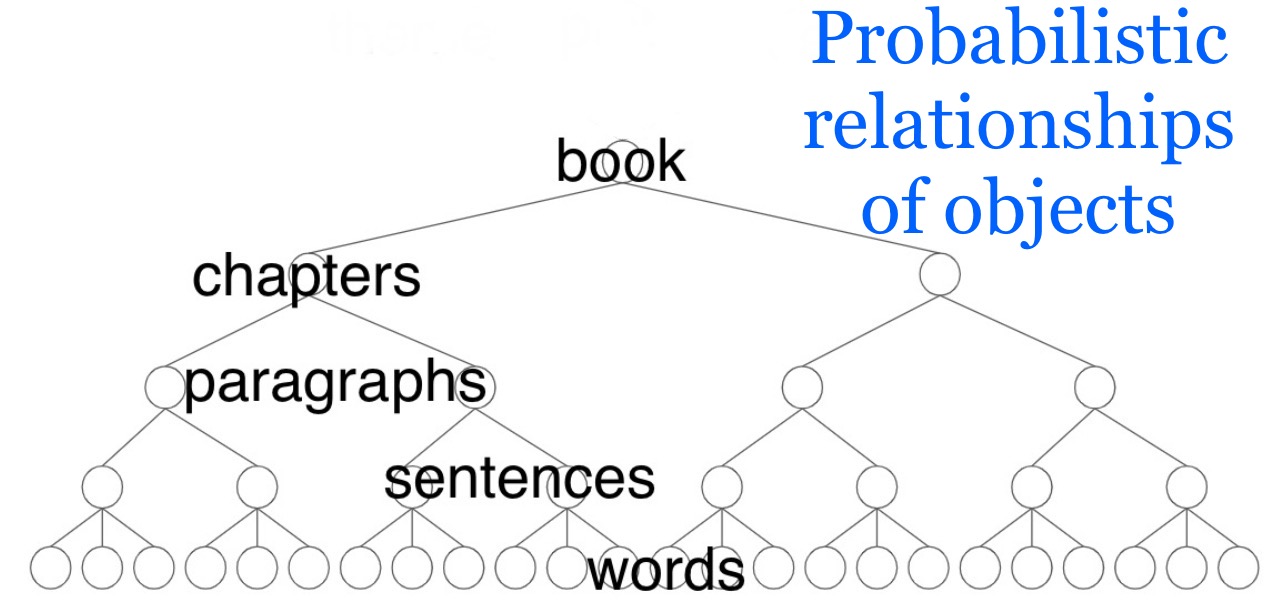

Based on that hierarchy, I can then create a PGM that looks like this.

Each level of the hierarchy becomes a node in the PGM. So, we’d have nodes for “Book,” “Chapters,” “Paragraphs,” “Sentences,” and “Words.”

Edges (connecting lines) connect the nodes and represent the probabilistic relationships between them. For example, there would be an edge between “Book” and “Chapters,” indicating the probability of a book containing chapters.

The hierarchical PGM is a powerful tool for understanding the statistical relationships between the elements. It allows us to move beyond the hidden layers of ANNs & LLMs.
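To make the idea a bit more concrete, here is a minimal Python sketch of the book hierarchy described above. It is a deliberate simplification rather than a full PGM with inference: each edge stores an invented probability that a child matters given that its parent matters, and a paragraph's score is the product of the probabilities along its path from the book. The `Node` class, the `leaf_scores` function, and every number are illustrative assumptions, not part of any existing library or product.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """One element of the hierarchy (a book, a chapter, a paragraph, ...)."""
    name: str
    # Each child is stored with P(child matters | parent matters).
    # All probabilities in this sketch are made up for illustration.
    children: list[tuple[float, "Node"]] = field(default_factory=list)

    def add(self, prob: float, child: "Node") -> "Node":
        if not 0.0 <= prob <= 1.0:
            raise ValueError("edge probability must be in [0, 1]")
        self.children.append((prob, child))
        return child


def leaf_scores(root: Node) -> dict[str, tuple[float, list[str]]]:
    """Multiply edge probabilities along every root-to-leaf path.

    For each leaf, return its score together with the path that produced
    it, so every number can be traced back through the hierarchy.
    """
    results: dict[str, tuple[float, list[str]]] = {}

    def walk(node: Node, prob: float, path: list[str]) -> None:
        path = path + [node.name]
        if not node.children:
            results[node.name] = (prob, path)
            return
        for edge_prob, child in node.children:
            walk(child, prob * edge_prob, path)

    walk(root, 1.0, [])
    return results


# A tiny hypothetical book: two chapters, three paragraphs.
book = Node("Book")
ch1 = book.add(0.75, Node("Chapter 1"))
ch2 = book.add(0.25, Node("Chapter 2"))
ch1.add(0.75, Node("Paragraph 1.1"))
ch1.add(0.25, Node("Paragraph 1.2"))
ch2.add(1.0, Node("Paragraph 2.1"))

scores = leaf_scores(book)
best = max(scores, key=lambda name: scores[name][0])
print(best, scores[best])
```

The point of the sketch is the returned path: unlike a hidden layer, the score for “Paragraph 1.1” can be explained as the product of the Book-to-Chapter and Chapter-to-Paragraph probabilities that led to it.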

Challenge 3: Affordability

Imagine training an AI like cramming for a test, bombarding it with every possible scenario imaginable. This is the status quo, and it uses the “stimulus-response” approach. Yes, it’s thorough, but it’s also expensive, requiring vast amounts of data and processing power. Because of this cost, it can stifle creativity and discourage healthy competition.

Solution: Rely on mental simulation

The solution is imagination (mental simulation). Creating virtual worlds is a well-established and well-understood technology. By adding this key feature, synthetic intelligence doesn’t need to be spoon-fed every answer (which can be resource intensive); it can learn to generate its own solutions and explain its reasoning based on its simulated experiences.

This focus on simulation not only reduces the need for massive datasets but also leads to more transparent and adaptable synthetic intelligence systems. In terms of cost, we can throttle or scale as needed.

| Challenge | Description | Solution |
|---|---|---|
| Alignment | Ensuring AI goals align with human well-being | Build AI with curiosity and a drive for truth-seeking; provide AI with a diverse dataset encompassing human history (successes & failures) |
| Understandability & Explainability | Lack of transparency in AI decision-making | Use hierarchical probabilistic models to break down problems and assign probabilities |
| Affordability | Expensive data requirements for traditional AI training | Leverage simulation (mental modeling) to explore possibilities and learn |


TLDR

Problem 1: Alignment

Solution 1: Foresight, memory, curiosity

Problem 2: Explainability

Solution 2: Hierarchical PGMs

Problem 3: Affordability

Solution 3: Add imagination

Why this would work

- Systems in nature can be described in a probabilistic hierarchy

- Dileep George et al. have already built and productized systems using this approach

- Malice and ill will are not necessarily part of intelligence

- Plenty of training data for human-alignment

What will happen

- Human-aligned AI that is 100% understandable and explainable, and affordable to unlock

- Unlock almost unimaginable possibilities from solving humanity’s most difficult challenges